Automating Mixed-Reality Capture

Defining the interaction model for machine perception and automated environment scanning on Quest.

01 // The Challenge

Designing for Imperfect Eyes

Mixed Reality (MR) depends on the headset understanding the physical layout of the room. Early Quest devices relied on users manually "drawing" their walls, a high-friction process that produced poor-quality data.

The business goal was "Automated Scanning," but the technology wasn't ready. The computer vision (SLAM) teams were struggling with reliability. They needed a design strategy that could handle the ambiguity of the real world: open floor plans, messy rooms, and occlusion.

02 // The System Model

The Hybrid Capture Model

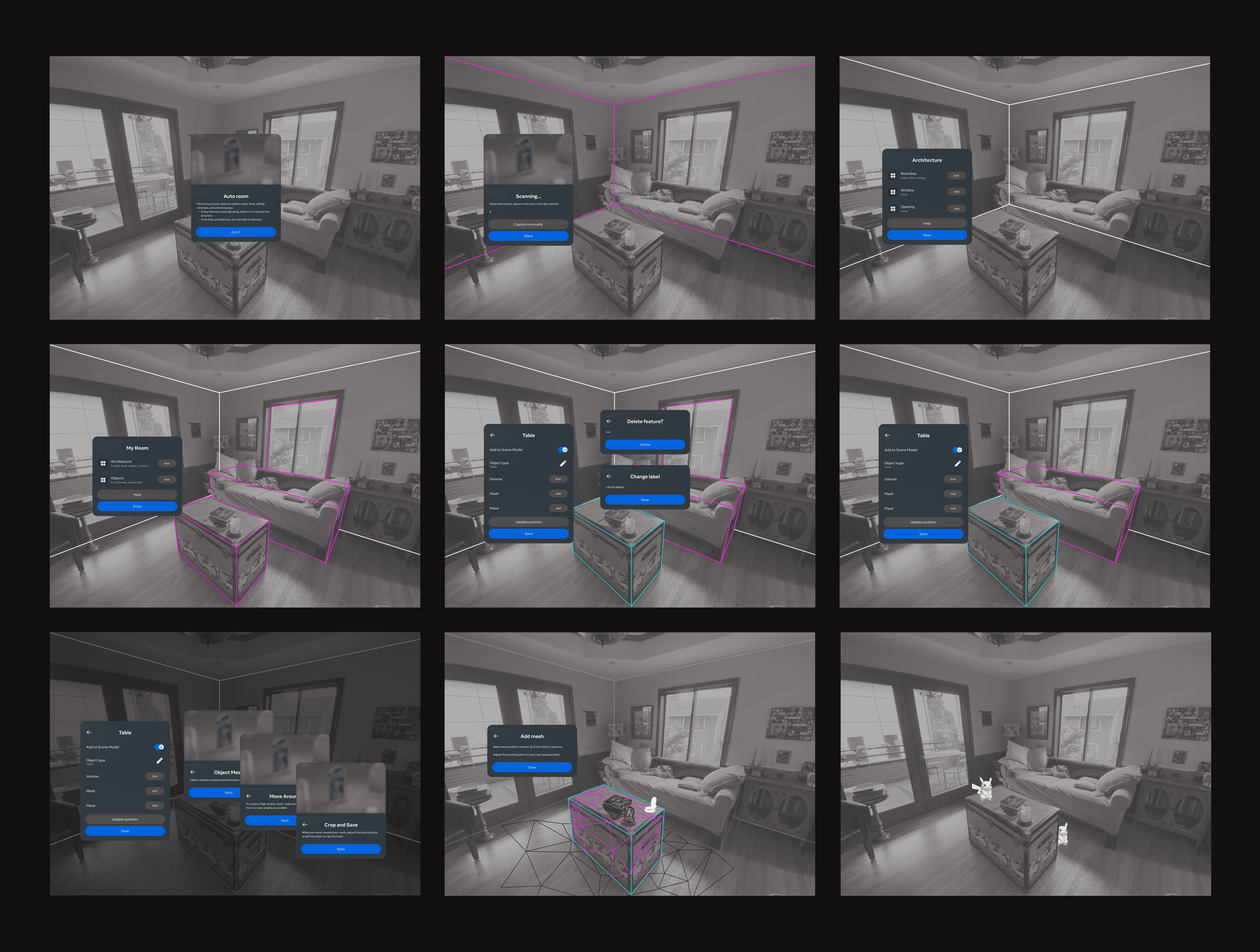

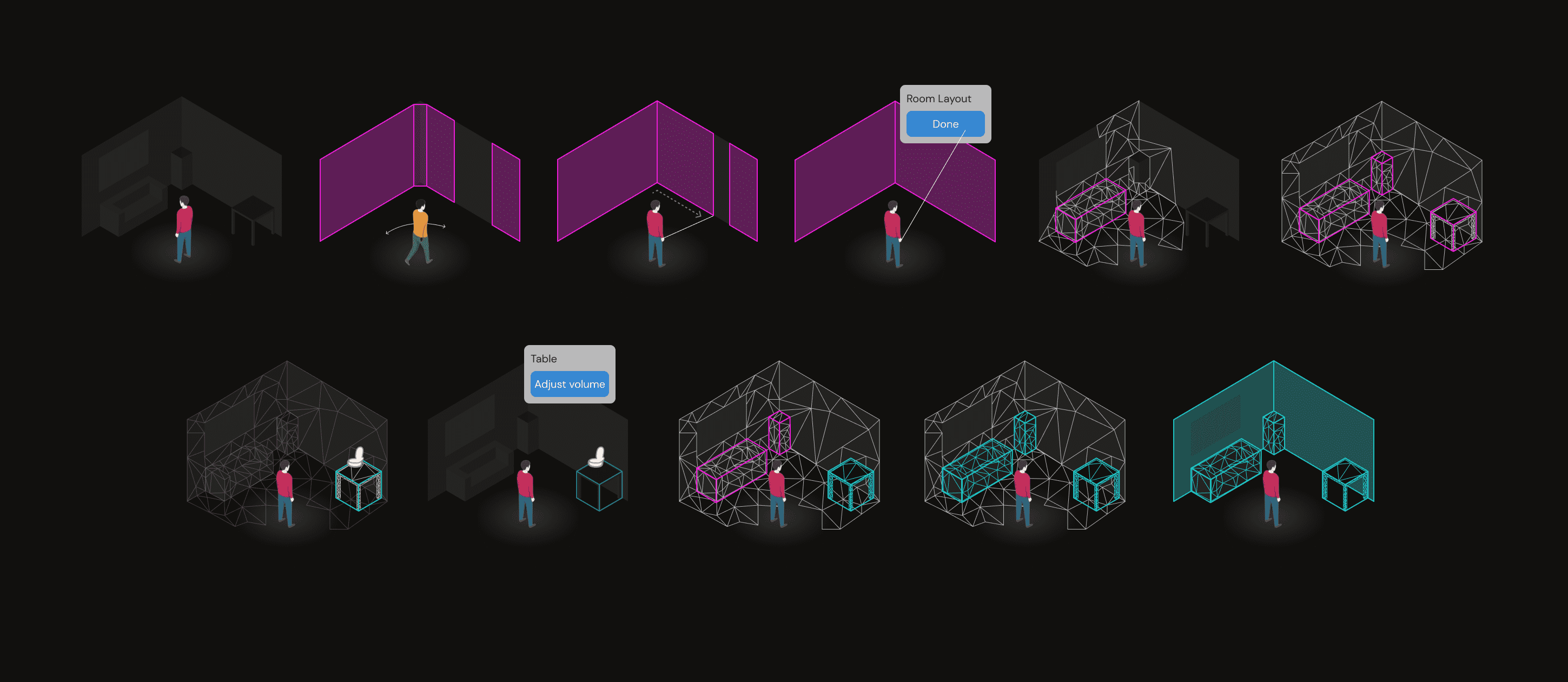

"We couldn't rely on full automation because the tech wasn't mature. I defined a 'Human-in-the-Loop' model: The machine suggests the geometry, and the human confirms or corrects it."

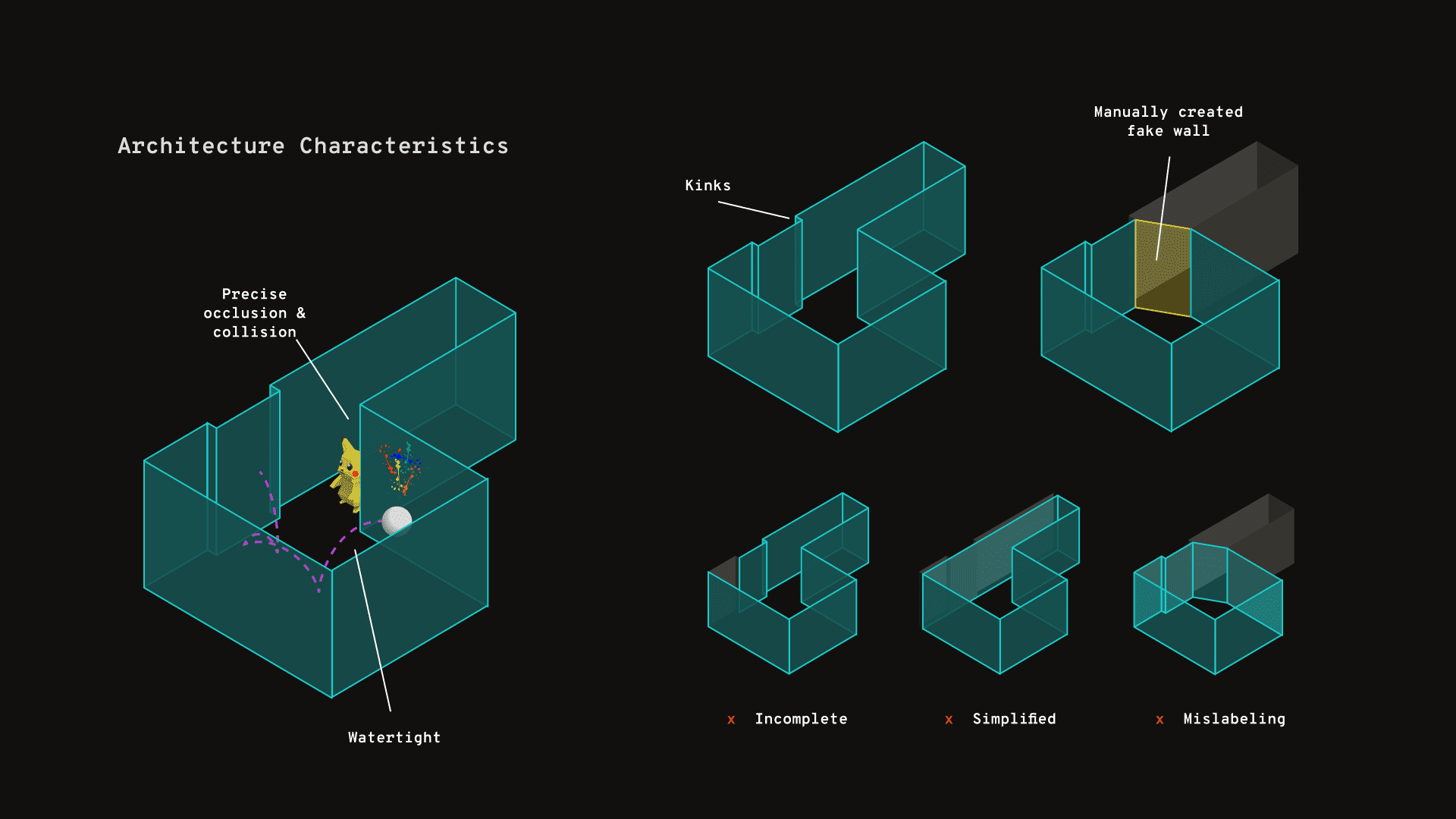

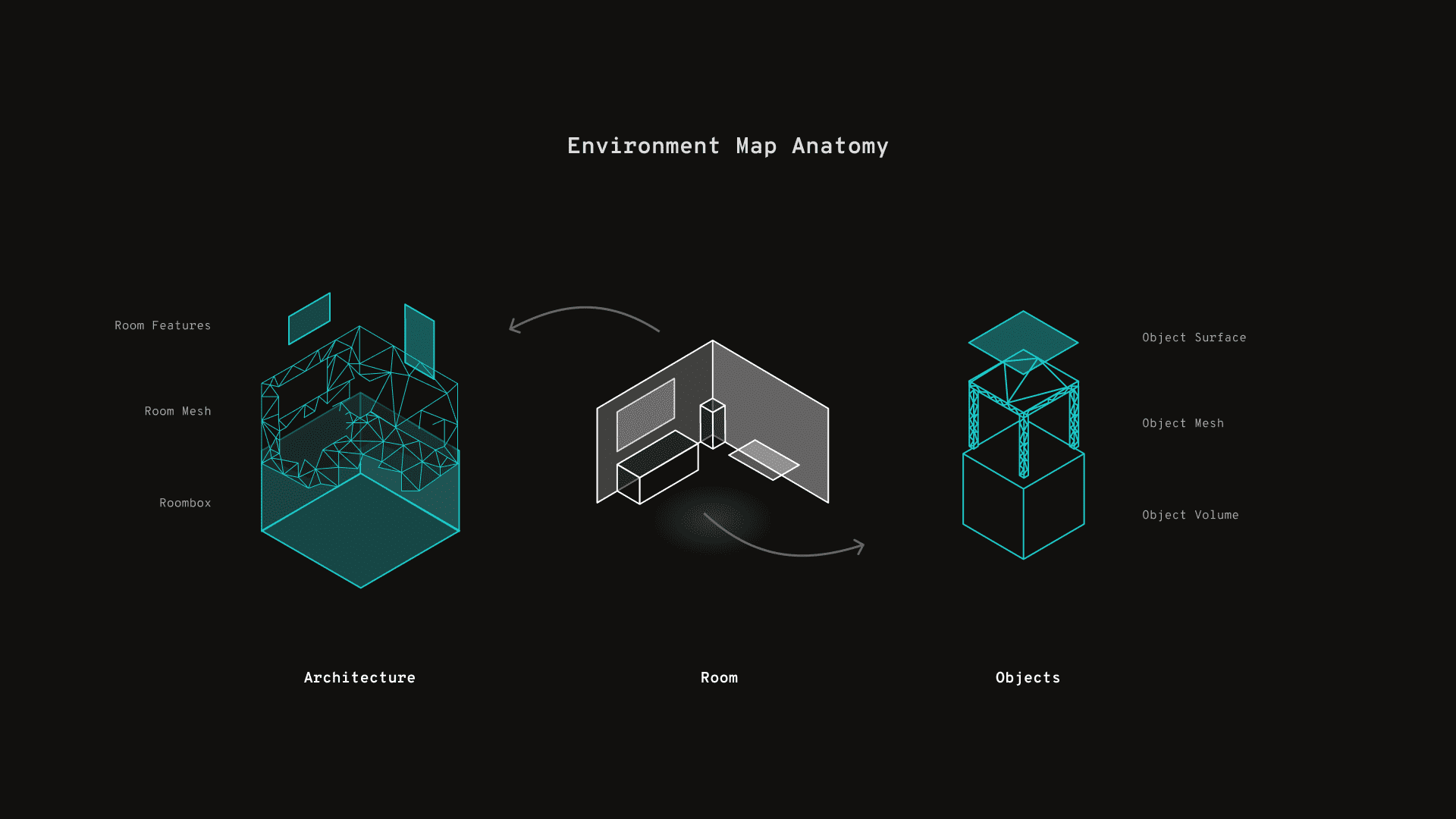

I broke the capture process down into four distinct primitives, allowing us to mix and match manual vs. automated steps depending on the hardware generation:

- Room Layout: walls, ceiling, floor

- Architectural Features: windows, doors

- Object Volumes: couches, tables (used for collision)

- Object Meshes: high-fidelity visuals

Designing for Failure

The most critical part of this work was not the "Happy Path," but the "Correction Path." What happens when the headset misses a wall? What happens if it thinks a couch is a table?

I designed comprehensive fallback logic that let users seamlessly take over whenever the algorithms failed.
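To make the Human-in-the-Loop model concrete, here is a minimal Python sketch of how the four primitives and the confirm / correct / fall-back flow could be expressed. It is illustrative only: the `Primitive`, `Proposal`, `Decision`, and `resolve` names, the 0.7 confidence threshold, and the per-primitive `automated` flag are hypothetical and are not taken from the Meta Spatial Scanner or the Spatial SDK.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Primitive(Enum):
    ROOM_LAYOUT = "room_layout"            # walls, ceiling, floor
    ARCHITECTURAL_FEATURES = "features"    # windows, doors
    OBJECT_VOLUMES = "object_volumes"      # couches, tables (collision)
    OBJECT_MESHES = "object_meshes"        # high-fidelity visuals


@dataclass
class Proposal:
    """A machine-suggested piece of geometry (hypothetical shape)."""
    primitive: Primitive
    label: str          # e.g. "wall", "table"
    confidence: float   # 0.0 to 1.0, as reported by the perception stack


@dataclass
class Decision:
    primitive: Primitive
    label: str
    source: str         # "machine", "user_corrected", or "manual"


def resolve(primitive: Primitive, automated: bool, proposal: Optional[Proposal],
            user_input: Optional[str], threshold: float = 0.7) -> Decision:
    """Resolve one capture step: the machine suggests, the human confirms or corrects.

    user_input is the label the user supplies; None means they accepted
    the suggestion as-is. The 0.7 threshold is a hypothetical value.
    """
    if not automated or proposal is None or proposal.confidence < threshold:
        # Correction path: this step is manual on this hardware, or the
        # algorithm missed or is unsure, so the user captures it by hand.
        return Decision(primitive, user_input or "unlabeled", "manual")
    if user_input is not None and user_input != proposal.label:
        # The user overrides a wrong suggestion (e.g. "table" -> "couch").
        return Decision(primitive, user_input, "user_corrected")
    # Happy path: the user confirms the machine's suggestion.
    return Decision(primitive, proposal.label, "machine")


if __name__ == "__main__":
    guess = Proposal(Primitive.OBJECT_VOLUMES, "table", 0.9)
    print(resolve(Primitive.OBJECT_VOLUMES, True, guess, "couch").source)  # user_corrected
```

Keeping the automation flag per primitive is what lets a step such as room layout be automated on one hardware generation while object volumes stay manual, without changing the confirm/correct flow the user sees.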

03 // The Impact

From Experiment to SDK

This work moved environment capture from a research demo to a shipping platform capability.

- The Meta Spatial Scanner: The model I defined (Room → Planes → Objects) became the reference implementation for the Meta Spatial Scanner, now a core part of the Quest OS.

- Developer Standard: This framework is now embedded in the Spatial SDK, defining how thousands of third-party developers interact with user environments.

- Unblocking Progression: By providing an experience that could handle "noisy" data, we let the product move forward before the machine learning models were 100% perfect, ensuring Quest 3 could launch on time.